“Deep Learning” Rises to the Surface at MBL

“As an inaugural course, you’ve made history at MBL!” said Education Director Linda Hyman to the participants in the first “Deep Learning for Microscopy Image Analysis” course, held this fall.

As in MBL’s other research training courses, where students tackle real, challenging problems in a scientific field, the “DL@MBL” students knew little about Deep Learning when they arrived, but could apply it to their own imaging data when they left.

“Everyone is learning so much – the faculty and teaching assistants as well as the students,” said Arlo Sheridan of the Salk Institute, a TA for the course. “It’s been amazing.”

The 2021 Deep Learning course participants. The students (alphabetically) are Shruthi Bandyadka, Benjamin Asher Barad, Sayantanee Biswas, Kenny Kwok Hin Chung, Steven Del Signore, Luke Funk, Samuel Gonzalez, Hope Meredith Healey, Anh Phuong Le, Matthew Loring, Nagarajan Nandagopal, Abigail Carson Neininger, Leti Nunez, Augusto Ortega Granillo, Tabita Shamayim Puebla Ramirez, Xiongtao Ruan, Gabriela Salazar Lopez, Suganya Sivagurunathan, Benjamin Sterling, Yuqi Tan, Linh Thi Thuy Trinh, Shixing Wang, Xiaolei Wang, Zachary Whiddon. Teaching Assistants: Mehdi Azabou, Caroline Malin-Mayor, William Patton, Morgan Schwartz, Arlo Sheridan, Steffen Wolf. Faculty: Jan Funke, David van Valen. Not shown but present: teaching assistants Larissa Heinrich and Tri Nguyen; faculty Shalin Mehta.

Deep Learning, a type of machine learning, may sound exotic, but it’s quickly become a gold standard approach for making sense of the huge amount of imaging data that modern microscopes can generate.

“Imaging the neurons in one, small fruit fly brain can generate 500 terabytes of data. Just looking at all those images would take 60 years of your life without sleeping, without going on vacation,” says Jan Funke of HHMI Janelia Research Campus, co-director of DL@MBL. “That would be very painful!”

Find Me All Images of Cats

Inspired by the structure of the human brain, Deep Learning uses computer models called “deep neural networks” to analyze data, in this case enormous volumes of biological images.

The scientist first has to train the model to produce a desired output. If they want it to detect images of cats, for instance, they will feed the model hundreds, thousands or millions of images in which cats have been “painted,” or annotated, as well as images with no cats. After many iterations of training, the model will be able to correctly predict cats in data it’s never seen before.
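The train-then-predict cycle described above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not the course's code: it uses a single artificial neuron (logistic regression) rather than a deep neural network, and the two numeric "features" per image, the function names and the toy data are all hypothetical stand-ins for real annotated images.

```python
import math
import random


def predict(weights, bias, features):
    """Return the model's estimated probability that an example is a cat."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, iterations=2000, learning_rate=0.5, seed=0):
    """Fit the model by repeatedly nudging it toward the annotated answers."""
    rng = random.Random(seed)
    n_features = len(examples[0][0])
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_features)]
    bias = 0.0
    for _ in range(iterations):
        # One training iteration: pick an annotated example, compare the
        # prediction with its label, and adjust the parameters to shrink the gap.
        features, label = rng.choice(examples)
        error = predict(weights, bias, features) - label
        weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
        bias -= learning_rate * error
    return weights, bias


# Hypothetical annotated training set: (features, label), 1 = cat, 0 = no cat.
# The feature values are invented numbers standing in for image measurements.
training_set = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.95], 1), ([0.95, 0.7], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.05], 0), ([0.05, 0.3], 0),
]

weights, bias = train(training_set)

# Examples the model never saw during training.
unseen_cat = [0.85, 0.85]
unseen_not_cat = [0.15, 0.15]
print("cat?", predict(weights, bias, unseen_cat) > 0.5)
print("cat?", predict(weights, bias, unseen_not_cat) > 0.5)
```

A real deep network stacks many such units in layers and learns directly from pixels, but the loop has the same shape: show an annotated example, measure the error, adjust, repeat.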

“It’s quite surprising, actually, how well it works. And it’s fair to say we don’t really understand how it works,” Funke says. “It has something to do with the layers inside the network. Just as in our brains, where neurons are organized in different layers [the visual cortex has six layers, for example, where depth perception, color, motion, etc., are processed], deep neural networks keep forwarding information from one layer to the next. The more layers the network has, the more correlations it makes between your input data and whatever you’ve trained it the output should be. And that makes it successful.”

Deep Learning models can be trained to classify images (e.g., cats or no cats), detect objects within images (only cats with long fur), track objects over time (cats running), and clean up blurred or noisy images, among other functions that would be extremely tedious or impossible to accomplish by visual analysis.

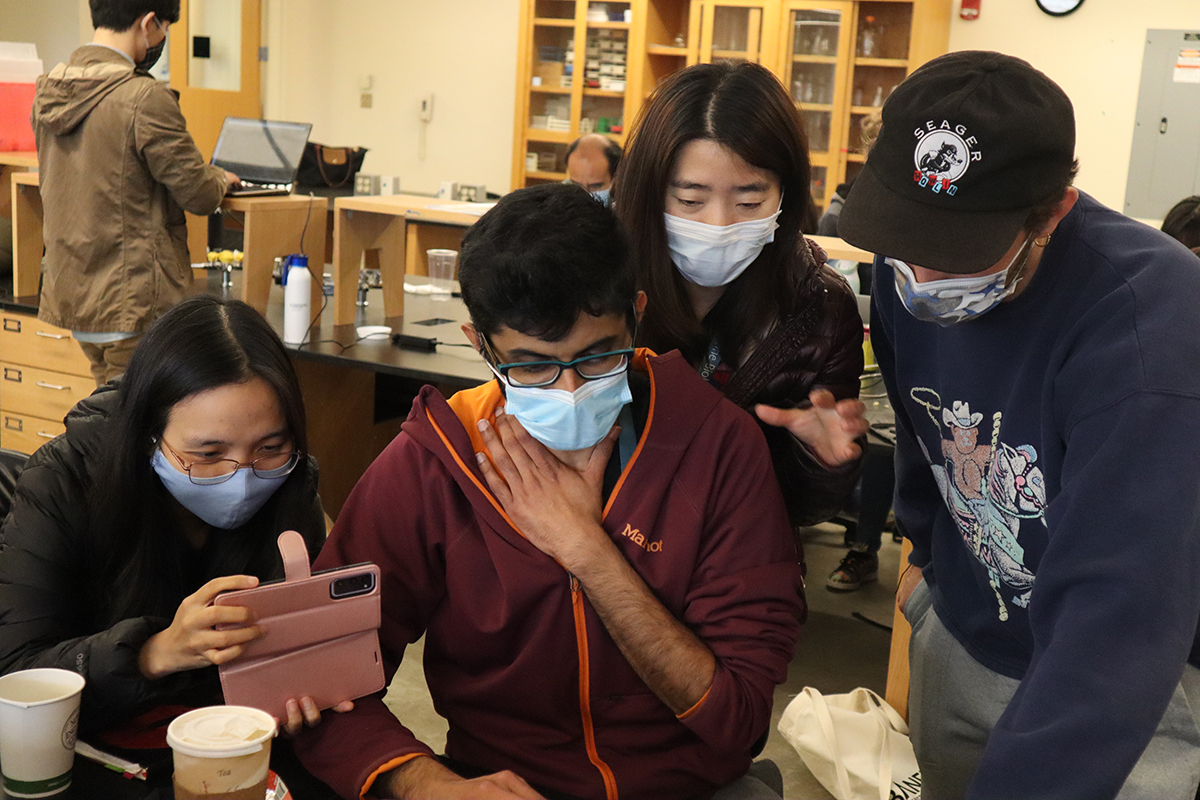

Members of the “Instance Segmentation” group are thrilled to see the neural network they had trained perform successfully. Left to right: Students Anh Phuong Le (Harvard Medical School), Sandy Nandagopal (Harvard Medical School), Xiaolei Wang (Duke University) and teaching assistant Arlo Sheridan (Salk Institute). Credit: Diana Kenney

The 24 students, most of them biologists, were asked to bring their own imaging data to the course. After five days of lectures and exercises in Deep Learning concepts, methods and tools, the students worked closely with faculty and TAs to apply their new skills to their own data.

“We identified promising datasets and grouped students with similar questions together,” Funke says. “Not all of the datasets were ready to be used for Deep Learning, which requires a lot of annotated training examples. So, a few groups worked with publicly available datasets that were similar to their own, so they can apply the methods once their own data is ready for training.”

(Neural) Networking for Success

One afternoon in Loeb Laboratory, DL@MBL looked as intense and exciting as any MBL course. The students were clustered in groups, cramming to prepare their data to present in the course’s final symposium. Suddenly a wave of excitement rippled through the self-named “Instance Gratification” group.

“Thirty minutes before their presentation, they got the neural network to do exactly what they wanted it to do!” crowed Sheridan.

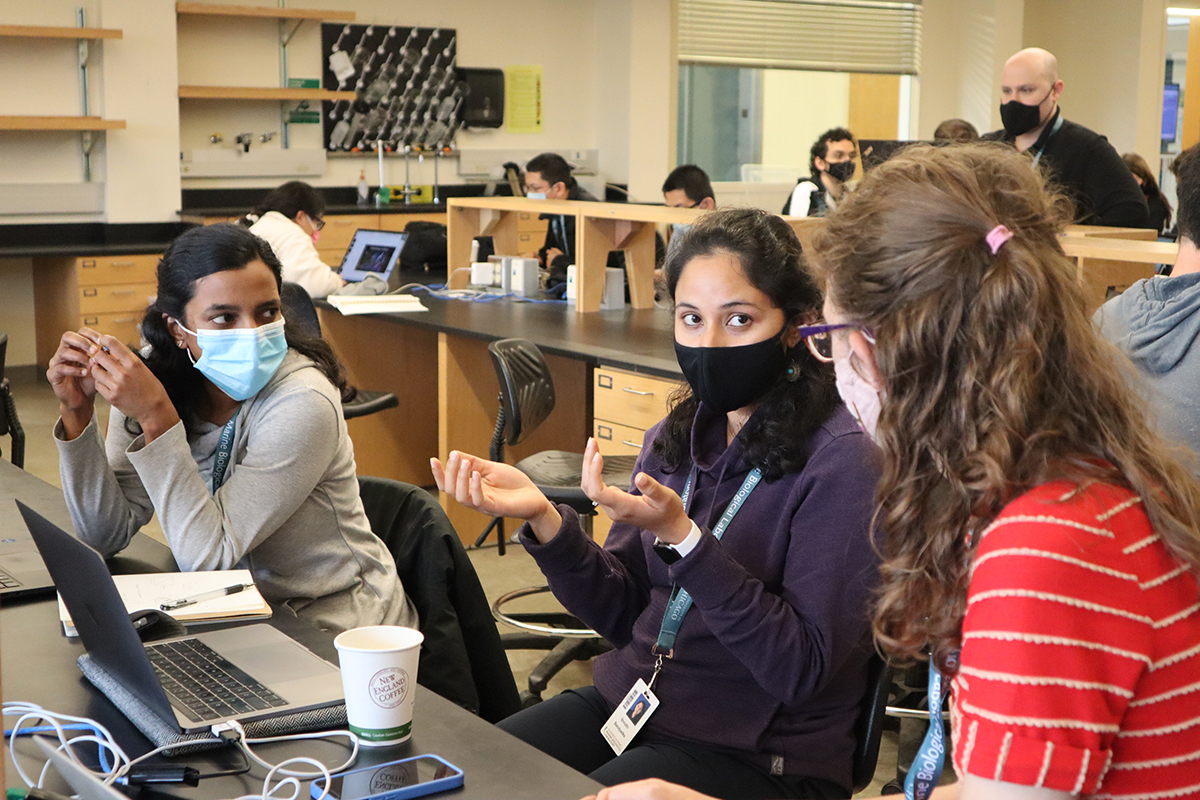

Members of the team that classified cell cycle phases in their image dataset. Left to right: Suganya Sivagurunathan (Northwestern University), Shruthi Bandyadka (Boston University) and Hope Healey (University of Oregon). Credit: Diana Kenney

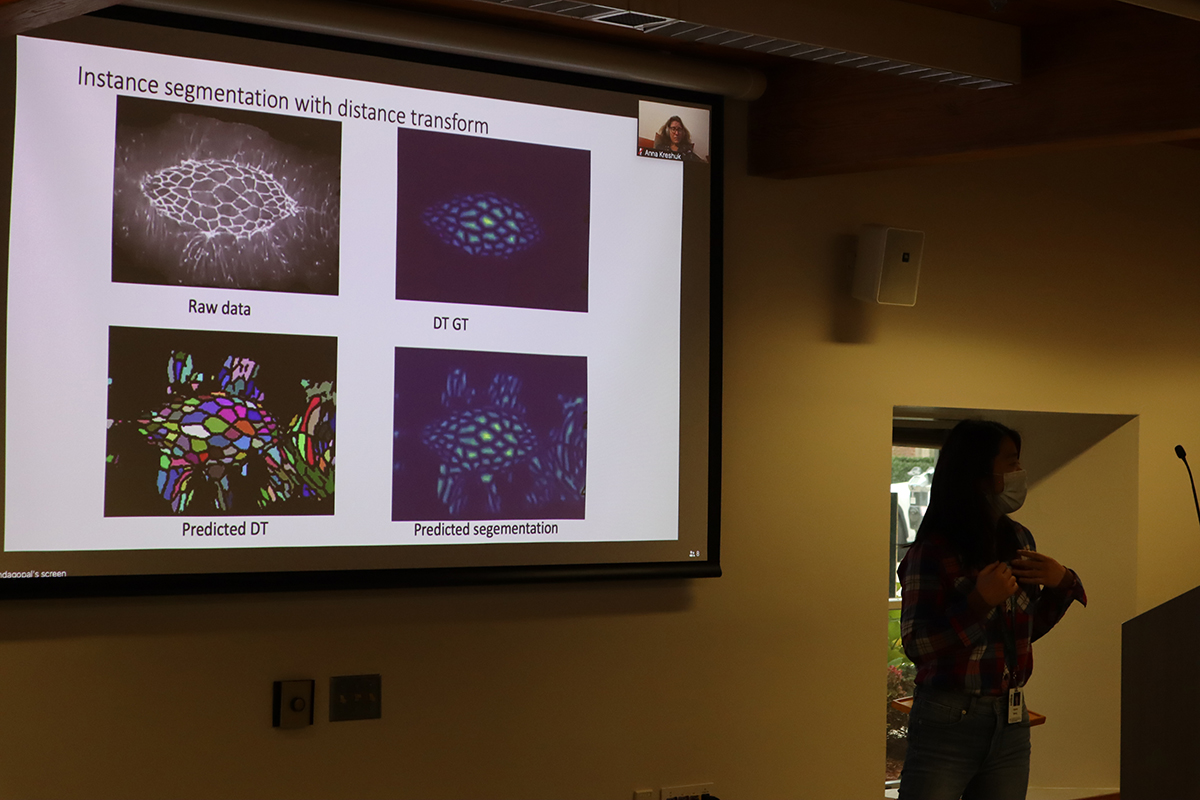

One of the students, Xiaolei Wang from Duke University, had brought movies of Drosophila epithelial cells growing and shrinking during morphogenesis, along with 100 annotated “ground truth” images of these cells and of another type of cell. Together with Sheridan and the rest of her group, she used the ground-truth images to train a deep neural network to recognize the difference between the two kinds of cells (called semantic segmentation) and to assign each cell a unique label (instance segmentation). They went through 50,000 iterations of training, which took about 4 hours. What happened at the 11th hour was just what they had hoped: The model successfully segmented the epithelial cells and tracked them over time. (The key was to run the model in 2D for each step in time, and then connect the labels between time steps to derive a final 3D segmentation.)
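The idea of segmenting each time point in 2D and then connecting labels through time can be sketched as follows. This is only an illustration under stated assumptions, not the group's actual method: the linking rule used here (give each cell the ID of the cell it overlaps most in the previous frame, matched greedily) is an assumption, and the function name `link_frames` and the tiny label grids are hypothetical.

```python
from collections import Counter


def link_frames(frames):
    """Relabel per-frame 2D segmentations so each cell keeps one ID over time.

    frames: list of 2D lists of ints, one per time point. 0 is background;
    other values are per-frame cell labels that need not agree across frames.
    Returns a (time, row, column) stack with consistent IDs.
    """
    linked = [[row[:] for row in frames[0]]]
    next_id = max((v for row in frames[0] for v in row), default=0) + 1
    for frame in frames[1:]:
        previous = linked[-1]
        # Count pixel overlaps between each current label and each previous ID.
        overlaps = {}
        for r, row in enumerate(frame):
            for c, label in enumerate(row):
                prev_id = previous[r][c]
                if label != 0 and prev_id != 0:
                    overlaps.setdefault(label, Counter())[prev_id] += 1
        # Greedily give each label the unclaimed previous ID it overlaps most;
        # a label with no overlap is treated as a newly appearing cell.
        mapping = {}
        used = set()
        for label in sorted({v for row in frame for v in row if v != 0}):
            candidates = overlaps.get(label, Counter()).most_common()
            match = next((pid for pid, _ in candidates if pid not in used), None)
            if match is None:
                match = next_id
                next_id += 1
            used.add(match)
            mapping[label] = match
        linked.append([[mapping.get(v, 0) for v in row] for row in frame])
    return linked


# Hypothetical per-frame instance segmentations of the same field of view.
frame_t0 = [[1, 1, 0, 0],
            [1, 1, 0, 2],
            [0, 0, 0, 2]]
frame_t1 = [[0, 5, 5, 0],   # same two cells, but labeled 5 and 3 in this frame
            [0, 5, 5, 3],
            [0, 0, 0, 3]]
frame_t2 = [[0, 0, 7, 7],   # cell 7 continues the first cell; cell 4 is new
            [0, 0, 7, 7],
            [4, 0, 0, 0]]

linked = link_frames([frame_t0, frame_t1, frame_t2])
for t, frame in enumerate(linked):
    print("t =", t, frame)
```

Overlap-based linking works only when cells move less than their own width between frames; faster motion or cell division would need a more careful matching step.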

“There’s a million different directions they can go now,” Sheridan said. “In their heads they are going, ‘Oh, now I want to segment everything!’”

Another group trained a neural network to classify cells according to which phase of the cell cycle they were in. And the “Pumpkin Spice” group trained a neural network to detect branch-like structures in imaging data, such as vasculature in the retina or long, filamentous mitochondria.

“The project phase of the course was definitely its most successful aspect, compared with our pilot course in 2019,” Funke said. Sheridan agreed. “When the students apply what they learn to their own data – stuff they care about – after they go home they can apply it and also teach it to other people,” he said.

“I came to MBL with one problem and got 10 problems to bring back. But I can't believe I was with zero background on Deep Learning and now can actually write and run a model!” wrote student Anh Phuong Le on Twitter.

Because of the pandemic, many of the faculty could attend only virtually, but they hope to be on the ground in Woods Hole next year.

“It was horrible to be on my couch instead of in the midst of all of you!” said course co-director Florian Jug of Human Technopole in Milan, Italy. “For a lecture, remote teaching is OK, but to guide the students beyond a 90-minute session, you really need to be on-site,” he said. Course co-director Anna Kreshuk of EMBL Heidelberg also looks forward to in-person attendance at the 2022 course, to begin in late August.

The costs of participating in the Deep Learning course were generously covered by the Howard Hughes Medical Institute, the Chan Zuckerberg Initiative, and the National Center for Brain Mapping.